Enhancing Large Language Models with Public RAG Models

Introduction

Large language models (LLMs) have an insatiable appetite for information, similar to humans. The ability to absorb and reason with knowledge is what sets these models apart. Consequently, the best-performing LLMs are typically those with a larger number of parameters, which translates to a more extensive knowledge corpus and enhanced reasoning capabilities. In this article, we explain our recent work on improving the performance of various models, especially the small ones, by injecting external knowledge while maintaining their reasoning abilities.

Currently, the GPT model is the most widely adopted LLM due to its state-of-the-art performance across various evaluation methodologies. However, relying on a closed model comes with several drawbacks. Utilizing an LLM cloud service necessitates sharing data with the service provider and giving up on control over sensitive information. Furthermore, it creates a dependency on internet connectivity. An alternative approach is to run open-source models locally, but even large open-source models often underperform compared to their closed counterparts. Furthermore, both open and closed-source models are lacking up to date information due to being limited in their training data. So, how can we bridge this performance gap without incurring significant development costs? The answer lies in Retrieval-Augmented Generation (RAG). By injecting entire public knowledge into LLMs via public RAG models, we can enhance their performance while closing the gap between small and state-of-the-art models without replicating the high costs associated with large models.

In that manner, at Dria, by transforming public knowledge into public RAG models, we are enabling LLMs to achieve better performance and access up-to-date information at the lowest possible cost. These public RAG models are freely and perpetually available for everyone to use, even locally, ensuring permissionless access to knowledge. Rather than relying on unverified and biased sources, Dria enables the creation of public RAG models through human collaboration and verification, providing truthful and unbiased knowledge repositories for AI.

Data

Retrieval Data Preparation

We have released an index from the entire Wikipedia(EN) by using a dataset from Huggingface. Dense retrieval is extremely scalable because it deals with a single representation for a large text chunk. However, retrieval performance significantly degrades when these text chunks have multiple/long contexts and/or details. To address this, a certain amount of chunking is necessary to increase granularity for better retrieval. Thus, we embedded full articles rather than just titles. Furthermore, to ensure the accuracy of retrieval we performed semantic chunking of articles for creating coherent blobs of text and summarized extensive chunks. Highlights of the retrieval index:

- Created a search index with 6M embeddings of Wikipedia articles (English)

- Embedded entire articles, not only titles with bge-large-en-v1.5 **

- Summarized Long articles for better retrieval

- Enabled combining vectors and keywords for precise results.

- Free, perpetual availability on decentralized storage.

- All available at Dria CLI:

dria serve

Dria CLI enables pulling an entire index from Dria to your local device and query for information retrieval easily. Since it runs on your disk, it leaves the RAM space for language models.

Evaluation Dataset & Models

We evaluated multiple LLMs + Wikipedia with a validation partition of HotpotQA, a dataset with 113k Wikipedia-based question-answer pairs for evaluation.

Our work uses a multi-threaded approach to evaluate different models on the HotpotQA dataset concurrently. We used the GPT-as-Judge model for the evaluation information retrieval of the LLMs listed below:

- Mixtral8X7B

- Command R

- Meta Llama 70B

- Meta Llama 13B

The HotpotQA dataset is loaded and split into rows, with each row being evaluated concurrently by a worker thread.

We designed it to be run from the command line, with options for specifying the maximum number of worker threads for concurrent model evaluation, the directory where the evaluation results will be saved, and the percentage of the HotpotQA dataset to be used for evaluation.

The Evaluation Methodology

Initially, we analyzed vanilla model responses, assessed by Judge LLM. Next, we extracted context for each question using Dria, then calculated similarity scores. Questions below the threshold were excluded from the evaluation cluster.

For included questions:

- Context is retrieved from the Local Wikipedia Index using Dria.

- Context is split into smaller chunks.

- Select two chunks with maximum number of shared keywords.

The context is then injected into the prompt for RAG. The response generated by the RAG model and vanilla model is then evaluated by GPT 4.5 to determine if the response aligns with the correct answer.

Evaluation Results

To investigate how the Retrieval-Augmented Generation (RAG) approach can enhance the performance of large language models (LLMs), we employed a methodology where we compared the answers of vanilla models and models augmented with external information retrieved for the given question. For the retrieval component, we utilized the English Wikipedia index from Dria, as described in the previous section. Since the index contains extensive chunks from Wikipedia articles, feeding wide context to small models may lead to confusion. Therefore, we performed a keyword search on the retrieved context. For each query, before injecting the context into the prompt, we parsed the retrieved context into chunks and conducted a keyword search for each chunk using the initial query. This approach allowed us to capture the entire related context during retrieval and then distill it down to only the most relevant portion. By doing so, we observed a significant improvement in the reasoning capabilities of small models and prevented them from getting overwhelmed by excessive context. Since we employed a simple RAG approach and keyword searches, we significantly reduced inference time and cost by making a single call to the LLM.

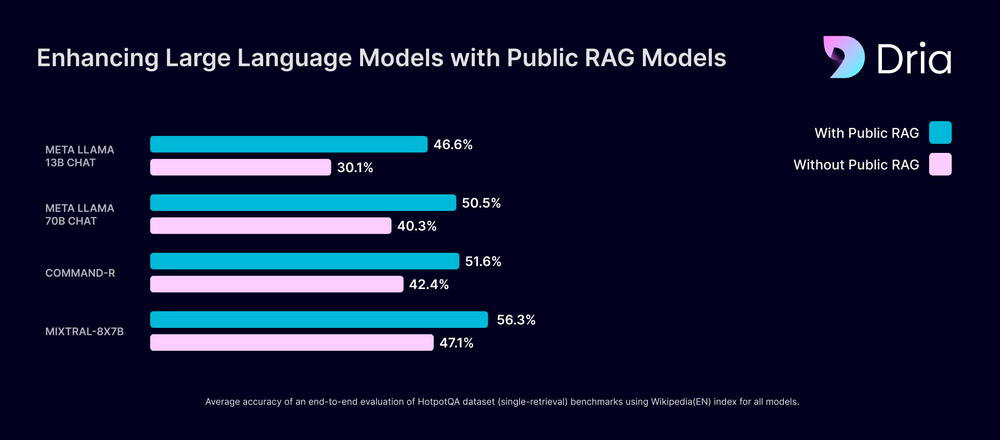

While prompting LLMs to obtain answers for the query, both with and without context, we employed the Chain of Thought methodology to ensure better reasoning performance. As demonstrated in Figure 1, we achieved a 7% to 16% improvement in the performance of various models. When examining the results without RAG, the gap between the best-performing model and the worst-performing model was 17%, while with RAG, this gap decreased to 9.7%. This finding proves that RAG is not only a cost-effective way to improve LLMs but also an effective approach for bridging the performance gap between different models.

.png)

Conclusion

Our findings once again demonstrate that RAG is a promising method for providing large language models (LLMs) with access to up-to-date and expanded knowledge, thereby ensuring better performance. For the work we have conducted thus far, you can find all the details, replicate the experiments, or further contribute to this research via our public repository. As a next step, we are planning to employ a novel methodology where we can leverage multiple public RAG models collaboratively built on Dria at a single query. This approach offers an opportunity to improve LLMs across different domains by utilizing various indexes as a single source of knowledge.

You can explore all the available models on Dria from the Knowledge Hub and benefit from our documentation to learn how to utilize them.